Welcome back. As I mentioned in Part I, the second part of my look back at the Open will focus more on the results of this year's Open. As in Part I, I'll also be putting things in perspective by making some comparisons to past years.

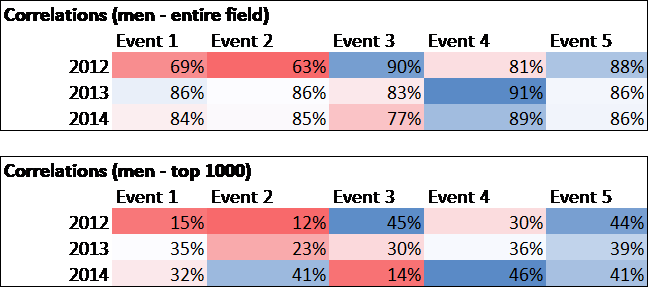

So let's start part II by looking at the event correlations, which is one way to judge how effective each workout is for measuring overall fitness (see "Are Certain Events 'Better' Than Others" from 2012 for more explanation). The charts below show, for the 2012-2014 men's* Opens, the correlation between each event and the sum of ranks for all other workouts in that season. Remember, correlations range from -100% to 100%, with 100% meaning that a higher rank on an event always indicates a higher rank across other events and 0% meaning that there was no relationship whatsoever between the rank on an event and the rank across other events.
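For anyone who wants to try this on their own data, here is a minimal Python sketch of the calculation described above. It assumes each athlete's results are stored as a list of event ranks (1 = first place) in a hypothetical `ranks` dictionary, and it uses a plain Pearson correlation on the ranks. The exact correlation method behind the charts isn't spelled out here, so treat this as an illustration, not the method used to produce them.

```python
from statistics import mean, pstdev


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = mean(xs), mean(ys)
    sx, sy = pstdev(xs), pstdev(ys)
    if sx == 0 or sy == 0:
        return float("nan")  # correlation is undefined when one side is constant
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
    return cov / (sx * sy)


def event_correlations(ranks):
    """ranks: hypothetical dict of athlete -> list of event ranks (1 = first).

    For each event, correlate the athlete's rank on that event with the sum
    of that athlete's ranks on all the other events in the same season.
    """
    athletes = list(ranks)
    n_events = len(ranks[athletes[0]])
    result = []
    for e in range(n_events):
        this_event = [ranks[a][e] for a in athletes]
        other_events = [sum(ranks[a]) - ranks[a][e] for a in athletes]
        result.append(pearson(this_event, other_events))
    return result
```

Because both inputs are rank numbers, an athlete who places well on one event and well elsewhere contributes small values to both lists, so a strong positive correlation (toward 100%) means the event lines up with overall success.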

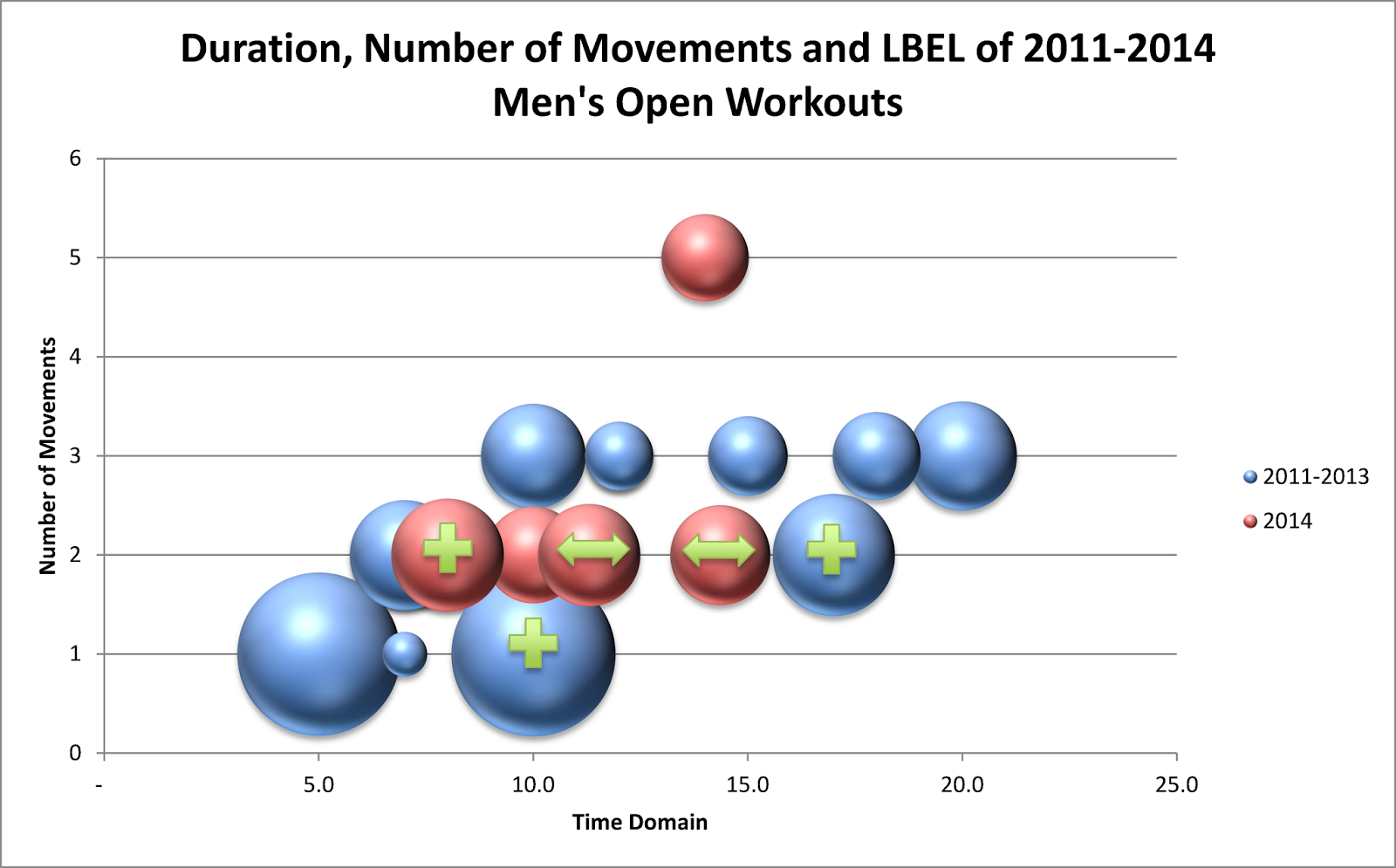

Not surprisingly, event 14.4 was the most correlated with overall success this year (both for the entire field and for the top 1,000 only). We've seen in the past that events with more movements typically have higher correlations, although several couplets have also had high correlations (13.4, for instance). Note that two of the weakest correlations were for 12.1 and 12.2, both single-modality workouts. We also see that 14.3 had a low correlation, which probably doesn't come as a shock to those of us who follow the sport closely. That event basically boiled down to a heavy deadlift workout, and consequently relative unknowns like Steven Platek ended up in the top 10 worldwide.

For those unfamiliar with the concept, below is a visual representation. The first chart shows the relationship between 14.3 rank and the sum of all other ranks, and the second chart shows the relationship between 14.4 rank and the sum of all other ranks. Notice how the bunching is tighter for 14.4 - there are fewer athletes who did well on 14.4 but not otherwise (these would be dots in the top left) and fewer athletes who struggled on 14.4 but did well otherwise (these would be dots in the bottom right).

The correlations were generally lower this year than in 2013, although they were higher than in 2012. Lower correlations aren't necessarily "bad" - 2014's lower correlations relative to 2013 are partly due to the workouts being more varied, which I personally liked. I think they struck a nice balance this year between avoiding events that were too specialized (like 12.1 or 12.2) and avoiding having the same athletes finish at the top of every event (which occurred to some extent in 2013). I think this was reflected in the point totals needed to qualify for regionals each year: in the Central East, for example, the 60th-place competitor scored 589 points in 2014, compared with 450 in 2013 (with approximately 65% as many competitors as 2014) and 508 in 2012 (with approximately 30% as many competitors as 2014). More variety in the events means that athletes can afford to give up more points and still reach regionals.

Let's move on to a comparison of performance between new athletes and continuing athletes. The charts below show the average percentile rank (0% being first place, 100% being last place) of athletes in each event, split between athletes who finished all 5 events in 2013 and those who either did not compete in 2013 or did not finish all events.
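A minimal sketch of that split, assuming percentile rank is computed as `(rank - 1) / (field_size - 1)` so that first place maps to 0% and last place to 100% (the post gives the endpoints but not the exact formula). The `event_ranks` dictionary and the `returning` set are hypothetical inputs.

```python
from statistics import mean


def percentile_rank(rank, field_size):
    # Assumed convention: first place -> 0.0, last place -> 1.0
    return (rank - 1) / (field_size - 1)


def average_percentile_by_group(event_ranks, returning):
    """event_ranks: hypothetical dict of athlete -> rank on one event.
    returning: hypothetical set of athletes who finished all 5 events last year.
    Returns the mean percentile rank for returning and new athletes."""
    n = len(event_ranks)
    groups = {"returning": [], "new": []}
    for athlete, rank in event_ranks.items():
        key = "returning" if athlete in returning else "new"
        groups[key].append(percentile_rank(rank, n))
    return {k: mean(v) for k, v in groups.items() if v}
```

Running this once per event gives one pair of bars per event, with lower values meaning the group placed closer to the top.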

Like last year, the returning athletes fared much better than the newcomers, and the difference was consistent across all events (the gap is nearly identical to last year's). This shouldn't come as a surprise. In fact, if we extend this further and look at athletes who competed all the way back in 2011, we find that they finished in approximately the top 25% for men and the top 20% for women.

This leads us to probably the most interesting analysis I have in this post: a comparison of 11.1 and 14.1. Last year, when I looked at 13.3 vs. 12.4, I found that overall, the field performed nearly identically across the two years. However, when we isolated this to athletes who competed in both years, we saw a significant improvement in 13.3.

So what about 14.1 vs. 11.1? First, I compared the results across all athletes who finished all 5 events in either year. Interestingly, when we look at the entire field, the average score in 2014 was approximately 10% lower for both men and women. This supports my belief that the Open didn't really become "inclusive" until 2012, when HQ put a lot more effort into convincing the community that everyone could and should participate. The 2011 Open was also intimidating: event 11.3 required male athletes to be able to squat clean 165 lb and female athletes to be able to squat clean 110 lb, or else they would get a DNF.

Below is a graph showing the distribution of scores in 11.1 and 14.1 for all women who finished all events in either year. The x-axis is shown in terms of stations completed rather than total reps, because this accounts for the fact that each round has twice as many double-unders as snatches. A score of 10 stations here equals 5 full rounds, or 225 reps. Note that the 11.1 distribution sits further to the right, indicating more athletes with high scores.
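Since 10 stations equals 5 rounds or 225 reps, each round is 45 reps, which with twice as many double-unders as snatches works out to 30 double-unders and 15 snatches. Here is a minimal sketch of the reps-to-stations conversion under that structure. It assumes a partially completed station counts as the fraction of that station's reps done; the post doesn't say how partial stations were handled, so that part is an assumption.

```python
DU_REPS = 30      # double-unders per round (derived from 45-rep rounds, 2:1 ratio)
SNATCH_REPS = 15  # snatches per round
ROUND_REPS = DU_REPS + SNATCH_REPS  # 45


def reps_to_stations(total_reps):
    """Convert a total rep score into stations completed (2 stations per round).

    Assumption: a partial station counts as the fraction of its reps completed.
    """
    full_rounds, rem = divmod(total_reps, ROUND_REPS)
    stations = 2 * full_rounds
    if rem < DU_REPS:
        stations += rem / DU_REPS                    # partway through double-unders
    else:
        stations += 1 + (rem - DU_REPS) / SNATCH_REPS  # DUs done, partway through snatches
    return stations
```

With this scaling, 15 extra double-unders and 7.5 extra snatches both move an athlete half a station, which is why the x-axis treats the two movements evenly.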

Like last year, however, this only tells part of the story. If we limit our analysis to athletes who finished all events in both years, we see the improvement we expected. Female athletes who competed in both years scored approximately 23% higher in 2014, male athletes who competed in both years scored approximately 14% higher, and both men and women averaged an impressive 283 reps in 2014. Below is a graph showing the distribution of scores in 11.1 and 14.1 for women who finished all 5 events in both years. Now it is the 14.1 distribution that sits further to the right.
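The difference between the two comparisons comes down to which athletes are included. Here is a minimal sketch, assuming hypothetical dictionaries that map an athlete ID to their 11.1 or 14.1 score, each already limited to athletes who finished all 5 events that year. The whole-field figure compares the two full fields, while the matched figure keeps only IDs present in both years.

```python
from statistics import mean


def pct_change(old, new):
    return 100 * (new - old) / old


def compare_years(scores_2011, scores_2014):
    """scores_2011 / scores_2014: hypothetical dicts of athlete_id -> reps.

    Returns (whole-field % change, % change for athletes in both years)."""
    whole_field = pct_change(mean(scores_2011.values()),
                             mean(scores_2014.values()))
    both = scores_2011.keys() & scores_2014.keys()  # athletes who did both years
    matched = pct_change(mean(scores_2011[a] for a in both),
                         mean(scores_2014[a] for a in both))
    return whole_field, matched
```

The whole-field number can fall even while the matched number rises, which is the pattern described above: a larger, more casual 2014 field pulls the overall average down while returning athletes improve.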

Another thing I looked into was the percentage of athletes who finished the workout on the double-unders. I used this as a proxy to see how the community has improved on the double-unders. The idea is that for athletes who are competent on double-unders, that station will be much shorter than the snatches, thus we should see fewer athletes finishing on the double-unders if the athletes are stronger in that area. I found the following:

- Men (entire field) - 2011: 50.7%, 2014: 47.4%

- Women (entire field) - 2011: 50.0%, 2014: 49.9%

- Men (competed both years) - 2011: 49.3%, 2014: 41.3%

- Women (competed both years) - 2011: 47.8%, 2014: 41.2%
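Here is a minimal sketch of how the "finished on the double-unders" proxy could be computed from rep scores, assuming the same 45-rep round of 30 double-unders followed by 15 snatches. It also assumes an athlete "finished on the double-unders" if their last completed rep was a double-under, so a score that ends exactly on a full round counts as finishing on the snatches. The post doesn't state how these boundary cases were handled, and the function names are hypothetical.

```python
DU_REPS = 30
ROUND_REPS = 45  # 30 double-unders + 15 snatches


def finished_on_double_unders(total_reps):
    """True if the athlete's last completed rep was a double-under.

    Assumes total_reps > 0."""
    position = (total_reps - 1) % ROUND_REPS + 1  # last rep's position in its round, 1..45
    return position <= DU_REPS


def pct_finishing_on_du(scores):
    """Percentage of rep scores that ended on the double-unders."""
    return 100 * sum(finished_on_double_unders(s) for s in scores) / len(scores)
```

If double-unders took no longer than snatches, you would expect roughly two thirds of athletes to end on them (30 of every 45 reps), so shares in the 40% to 50% range suggest the snatches were the slower station for many athletes.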

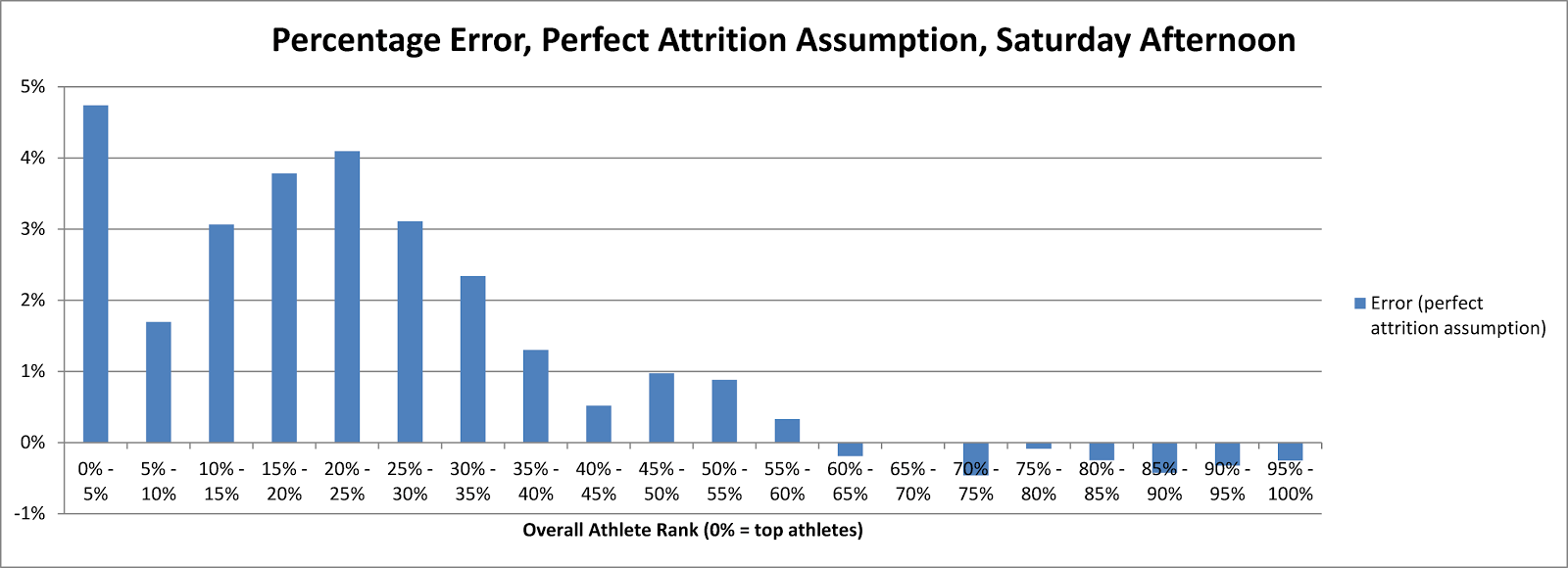

Finally, I took a look at the attrition from week to week this season. The chart below shows how the field declined each week over the past four seasons. To reduce clutter, I averaged the results for men and women each season.

Again, we see that things shifted pretty significantly after 2011. As I mentioned earlier, I believe that there was a much smaller percentage of "casual" Open participants in 2011 than we see today. The chart above supports that. Fewer athletes dropped off that season because those who chose to sign up were generally more committed to competing for the long haul.

Since then, the men's and women's fields have finished with between 59% and 64% of the athletes who completed event 1. The percentage dropping off each week has varied between 4% and 19%, averaging approximately 11%**.
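As a quick consistency check, a constant weekly drop compounds over the four weeks between event 1 and event 5. This minimal sketch assumes the same drop rate every week, which is a simplification since the actual weekly figures ranged from 4% to 19%; the function names are hypothetical.

```python
def retention_after(weekly_drop, weeks):
    """Share of the event 1 field remaining after `weeks` weekly drop-offs,
    assuming a constant drop rate each week."""
    return (1 - weekly_drop) ** weeks


def projected_field(event1_count, weekly_drop=0.11):
    """Projected number of athletes posting a score in events 1 through 5."""
    return [round(event1_count * retention_after(weekly_drop, w)) for w in range(5)]
```

At 11% per week, retention after four weeks is 0.89^4, or about 63%, which falls inside the 59% to 64% range above. For a field of 1,000 on event 1, the projection is 1000, 890, 792, 705 and 627 athletes.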

Well, that's it for today. I've covered a lot in these past two posts, but at the same time I think there is plenty more work that can be done with the Open data, especially now that I have all four years of it to play around with and compare. That being said, I think it's time to shift the focus to Regionals, so I'll see you all again in a few weeks.

*The women's correlations are very similar.

**11% would probably be a good place to start for the attrition estimate needed to do the mid-week overall projections (described in recent posts).
